The Moon Experience is an interactive, immersive virtual reality system based on the historic Apollo Program (1961-1972). The goal of the project is to demonstrate how to create an effective learning experience in a virtual space, one that would otherwise be impossible to realize in the real world. The project draws on technologies and approaches from multiple disciplines. Virtual reality technology establishes the virtual lunar world and gives participants an immersive, firsthand experience. Computer game technology reinforces the effectiveness of the learning environment. Motion capture and computer animation enable real-time interaction between users and the system, sustaining the sensation of being on the Moon. Learning principles and storytelling provide the participant with appropriate situated-learning content. A narrative framework helps heighten the audience’s perception, trigger their imagination, and transcend the current limitations of virtual reality.


The Moon Experience from Cheng Zhang on Vimeo.


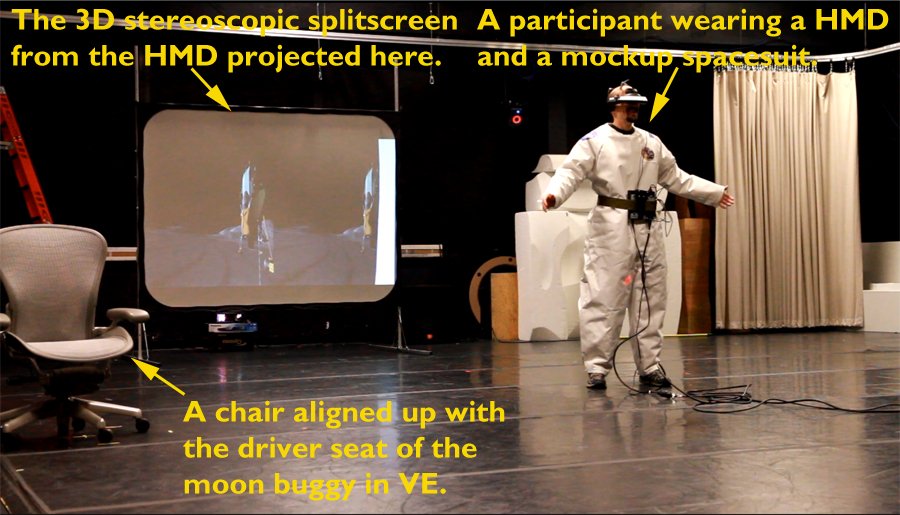

As in every real Apollo landing mission, there are two astronauts in the system. One is a virtual character named Jack; the other is the participant's avatar. Jack is the commander and plays the role of instructor: through his conversation with his guest, he gives the participant all the necessary information and guidance. A participant enters the virtual world by putting on a Sony head-mounted display (HMD). He or she also wears a mockup spacesuit and a wireless Lavalier microphone to talk with Jack over the radio. The motion capture (MOCAP) system tracks the user's motion and maps his or her position into the virtual lunar world. An operator in the control room manipulates Jack's actions and controls the interactions between the system and the participant via a keyboard, a mouse, or a game controller.
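The page does not describe how a tracked position is carried into the lunar scene. A minimal sketch, assuming a fixed calibration made of a uniform scale, a rotation about the vertical axis, and an offset (all hypothetical parameters), and assuming the tracker positions have already been converted to a y-up frame, could look like this:

```python
import math
from dataclasses import dataclass


@dataclass
class Registration:
    """Hypothetical calibration that maps MOCAP-volume positions into the lunar scene."""
    scale: float                          # scene metres per tracker unit (0.001 for millimetres)
    yaw_deg: float                        # rotation of the capture volume about the vertical axis
    origin: tuple[float, float, float]    # scene position of the capture-volume origin

    def to_world(self, p):
        """Map a tracker position (x, y, z), assumed already y-up, into scene coordinates."""
        x, y, z = (c * self.scale for c in p)
        a = math.radians(self.yaw_deg)
        # Rotate about the vertical (y) axis, then translate to the chosen origin.
        xr = x * math.cos(a) + z * math.sin(a)
        zr = -x * math.sin(a) + z * math.cos(a)
        ox, oy, oz = self.origin
        return (xr + ox, y + oy, zr + oz)
```

Because the mapping is rigid apart from the unit conversion, a step taken in the capture volume becomes a step of the same length on the virtual terrain.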


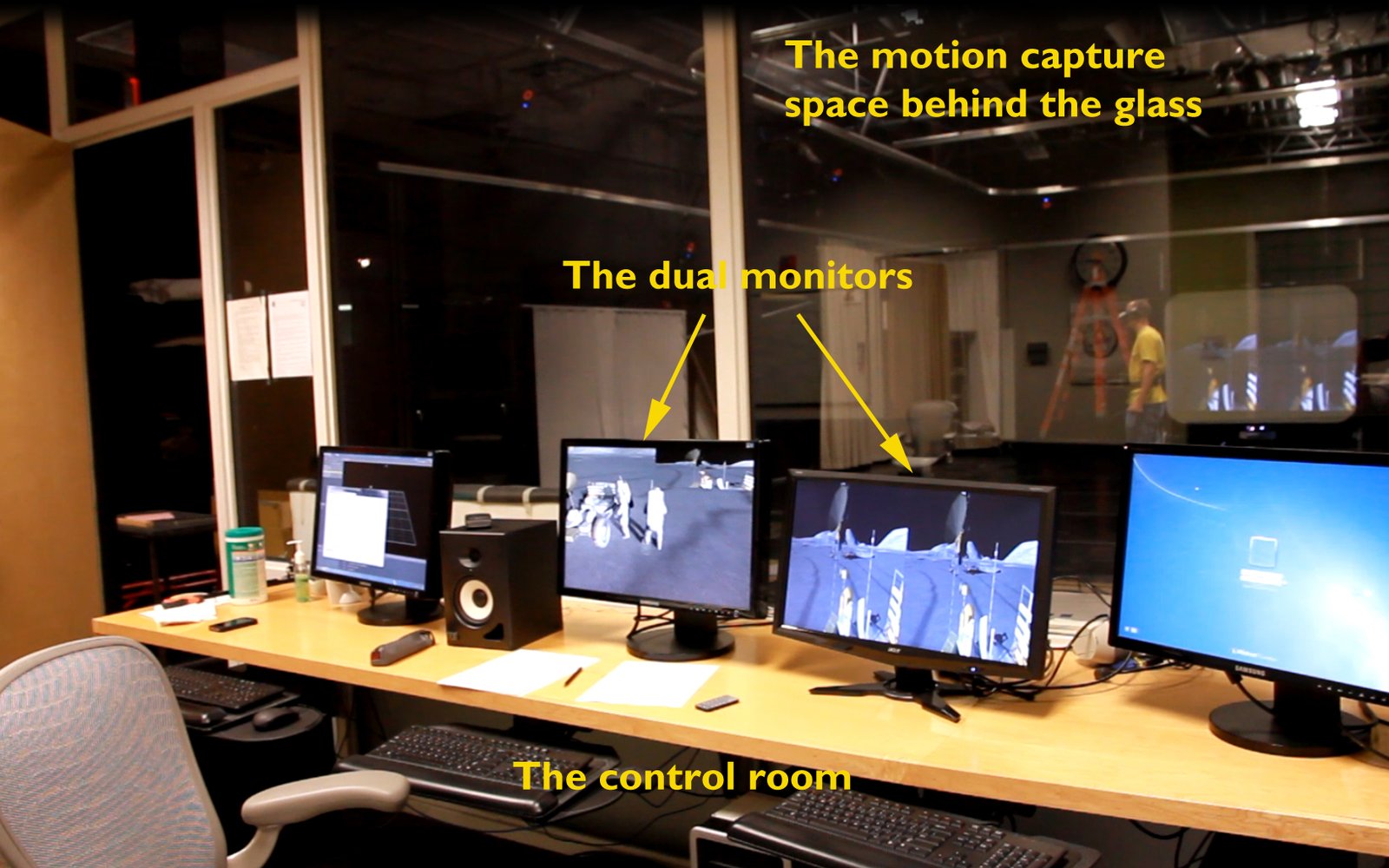

The configurations in the control room (left) and in the MOCAP space (right).


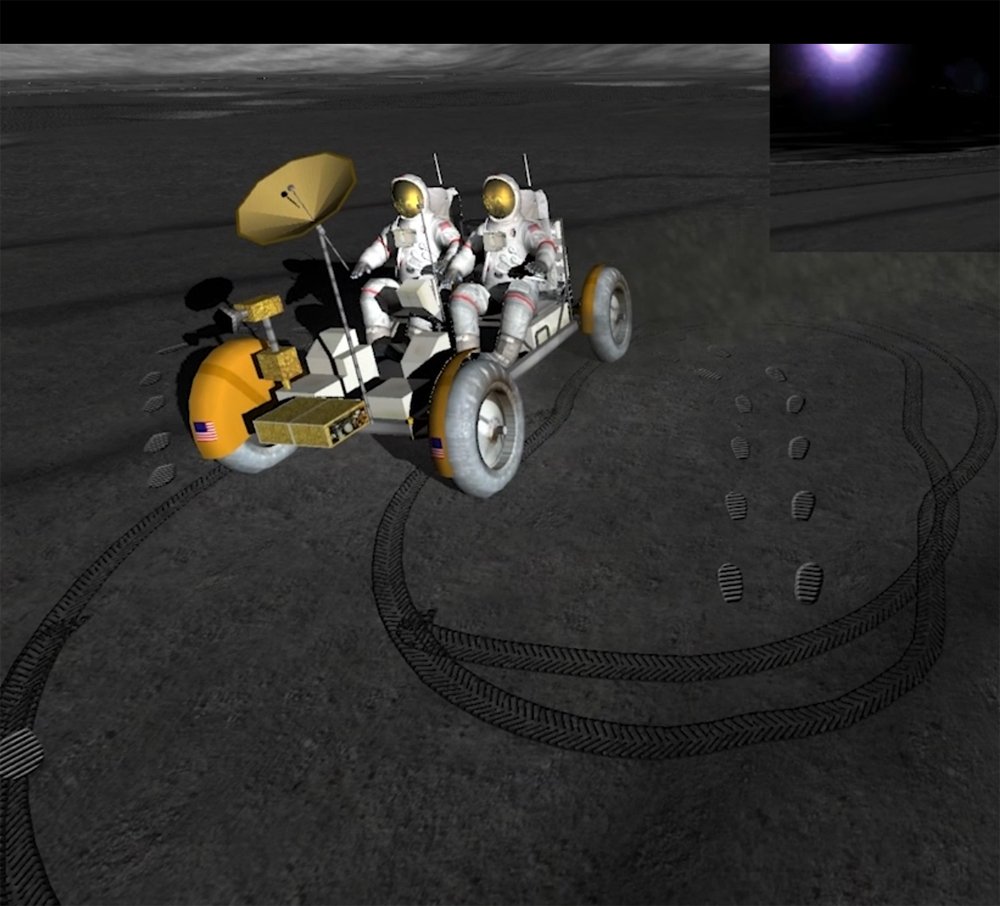

The virtual lunar world consists of a lunar terrain, two astronauts, a lunar roving vehicle (LRV), a lunar module (LM), the Sun, the Earth, and the movements these objects create. For the terrain I choose the Apollo 17 landing site, the Taurus-Littrow valley, and use its real digital elevation model (DEM) data to build the terrain with Unity's terrain engine.
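Unity's terrain engine expects a square heightmap whose resolution is a power of two plus one (for example 513 or 1025), with heights normalized to the range 0 to 1 and a separate terrain height that scales them back to metres. The sketch below shows one way DEM data could be prepared for that; the function name and the bilinear resampling are illustrative assumptions, not the project's actual pipeline.

```python
def dem_to_heightmap(dem, resolution):
    """Resample a DEM grid (rows of elevations in metres) into a square heightmap.

    Returns (heights, height_range_m): heights is a resolution x resolution list of
    rows normalised to [0, 1]; height_range_m is the vertical extent that 1.0 maps to.
    """
    n = resolution - 1
    if n < 1 or n & (n - 1):
        raise ValueError("resolution must be a power of two plus one, e.g. 513")
    rows, cols = len(dem), len(dem[0])
    lo = min(min(r) for r in dem)
    hi = max(max(r) for r in dem)
    span = (hi - lo) or 1.0  # avoid dividing by zero on perfectly flat input

    heights = []
    for i in range(resolution):
        # Fractional source row for this output row (bilinear resampling).
        v = i * (rows - 1) / n
        r0 = int(v)
        r1 = min(r0 + 1, rows - 1)
        fv = v - r0
        row = []
        for j in range(resolution):
            u = j * (cols - 1) / n
            c0 = int(u)
            c1 = min(c0 + 1, cols - 1)
            fu = u - c0
            top = dem[r0][c0] * (1 - fu) + dem[r0][c1] * fu
            bottom = dem[r1][c0] * (1 - fu) + dem[r1][c1] * fu
            elevation = top * (1 - fv) + bottom * fv
            row.append((elevation - lo) / span)
        heights.append(row)
    return heights, hi - lo
```

In a Unity script, the normalized rows would then be passed to `TerrainData.SetHeights`, with the returned range used as the terrain's vertical size so the valley keeps its real relief.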


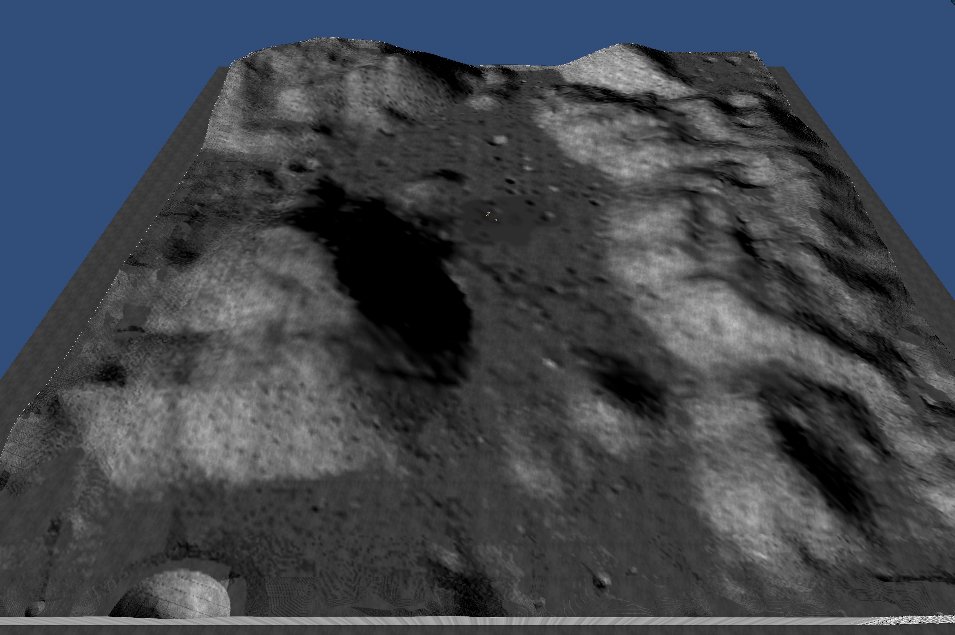

A bird's-eye view of the Taurus-Littrow valley model in Unity.

A close-up view of the lunar terrain in Unity.


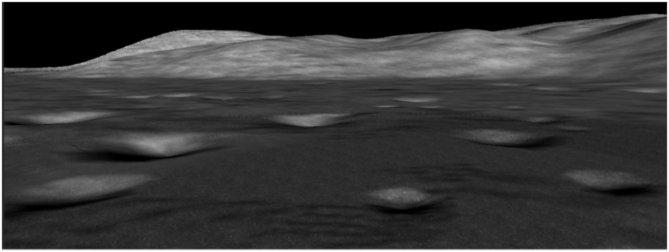

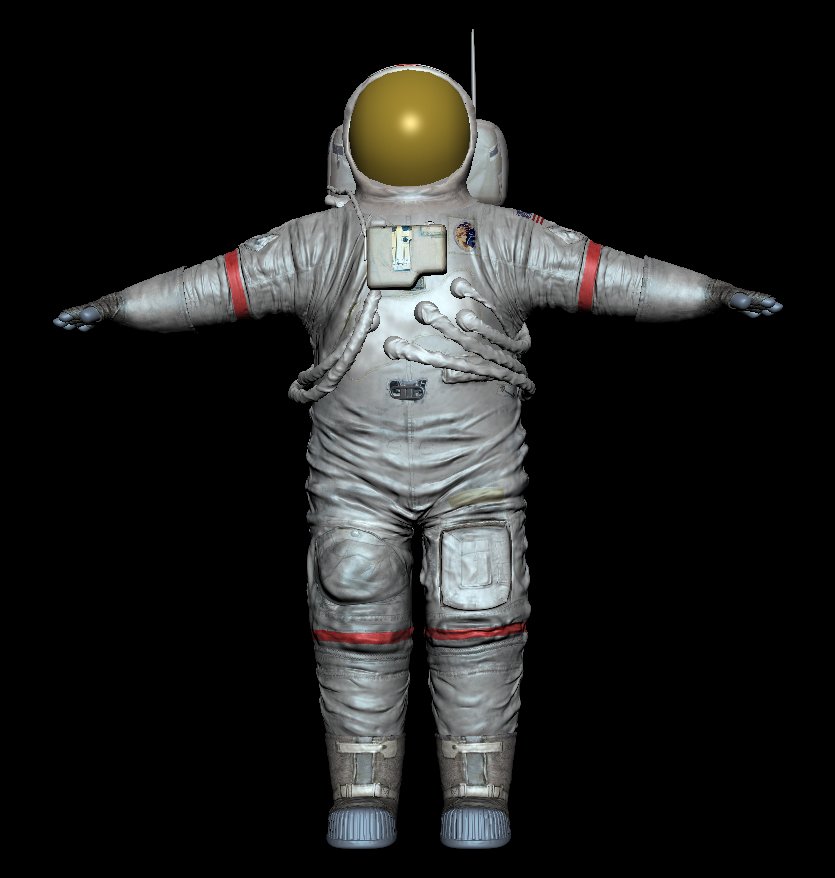

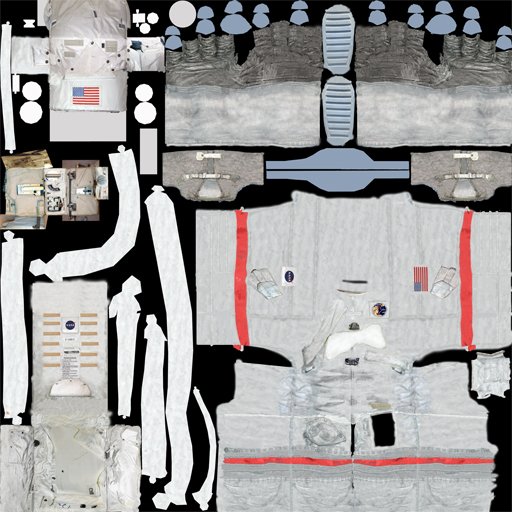

Because a Unity application renders in real time, each model has to stay within a practical polygon budget. At the same time, I want the astronaut to be a detailed model so that the experience is believable. To resolve this conflict, I create a low-resolution model in Maya and sculpt a high-resolution one in Mudbox. I then use the high-resolution model to generate a normal map for the low-resolution model. In Unity, I use the low-resolution astronaut model with the normal map and the color map. In this way, The Moon Experience achieves good performance while maintaining the realistic appearance of the astronauts.
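As a rough illustration of what the baked normal map stores: each texel of a tangent-space normal map holds a unit normal whose components, in the range -1 to 1, are remapped to 0 to 255 in the RGB channels, and the shader reverses this at render time. The helpers below are a minimal sketch of that encoding plus a simple diffuse lighting term; the function names are hypothetical, and actual channel packing depends on the engine and texture compression settings.

```python
import math


def encode_normal(n):
    """Pack a tangent-space normal (x, y, z) into 8-bit RGB: [-1, 1] -> [0, 255]."""
    length = math.sqrt(sum(c * c for c in n))
    return tuple(round((c / length * 0.5 + 0.5) * 255) for c in n)


def decode_normal(rgb):
    """Unpack 8-bit RGB back into a unit normal, as a shader would at render time."""
    n = [c / 255 * 2 - 1 for c in rgb]
    length = math.sqrt(sum(c * c for c in n))
    return tuple(c / length for c in n)


def lambert(normal, light_dir):
    """Diffuse brightness for a unit normal and a unit direction towards the light."""
    return max(0.0, sum(a * b for a, b in zip(normal, light_dir)))
```

Because shading uses the decoded per-texel normal rather than the coarse geometry's normal, detail sculpted in Mudbox still affects the lighting on the 5948-face model.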


The low-resolution model with 5948 polygonal faces in Maya.

The high-resolution model with ~16 million polygonal faces in Mudbox.


The normal map generated from the high-resolution model. The low-resolution model with the normal map applied in Unity.


The color map. The hand model.


The movements in the virtual space are created through animation in two ways. The first is to record human motion with the Vicon Motion Capture system and map it onto the virtual figure; Jack’s movements are created this way (see the left image in the picture below). The remaining animations are generated in real time by scripts in Unity, including the motion of the LRV, the dust, the wheel tracks, and the footprints.


Tom, wearing the MOCAP suit, acting in the MOCAP space.

The real-time animations create the dust, wheel tracks, and footprints.
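The scripts behind the real-time footprints are not shown on this page. As a hypothetical sketch, footprints can be dropped at a fixed stride along the walker's path, alternating between the two sides of the path; the function name, stride, and offset values below are assumptions.

```python
import math


def footprints(path, stride=0.7, half_gait=0.15):
    """Place alternating footprints along a walked ground path.

    path: list of (x, z) ground positions sampled over time, e.g. once per frame.
    stride: distance walked between consecutive footprints (hypothetical, metres).
    half_gait: sideways offset of each print from the path centre line (metres).
    Returns a list of (x, z, side) tuples, with side alternating +1 / -1.
    """
    prints = []
    since_last = 0.0   # distance walked since the previous footprint
    side = 1
    for (x0, z0), (x1, z1) in zip(path, path[1:]):
        seg = math.hypot(x1 - x0, z1 - z0)
        if seg == 0.0:
            continue   # standing still leaves no new prints
        dx, dz = (x1 - x0) / seg, (z1 - z0) / seg   # unit heading
        d = stride - since_last   # where along this segment the next print falls
        while d <= seg:
            px, pz = x0 + dx * d, z0 + dz * d
            # Offset perpendicular to the heading; which side reads as "left"
            # depends on the engine's coordinate handedness.
            prints.append((px - dz * half_gait * side, pz + dx * half_gait * side, side))
            side = -side
            d += stride
        since_last = seg - (d - stride)
    return prints
```

Carrying the leftover distance across segments keeps the spacing even when the position is sampled at irregular frame intervals; wheel tracks could be laid down the same way with a shorter spacing.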


The Moon Experience has three narrative scenarios designed to engage the participant with familiar concepts about the Moon and to increase understanding by providing new information through direct experience.

While still allowing for spontaneous interaction, the linear story elements provide a design structure for the non-linear user experience. The narrative keeps the design and implementation focused on what is germane to the three scenarios, and the interfaces and interactions are designed around the story in a theme-driven, user-centric way.


Interfaces in The Moon Experience include physical devices and those created in software.

The physical devices are the monitors, the keyboard, the mouse, the game controller, the Vicon Motion Capture system, the Sony HMD, the wireless Lavalier microphone, the props used in motion capture, and the mockup spacesuit. The mockup spacesuit gives the user a limited field of view, a closed-in, bulky feeling, and restrained movements.


Dual monitors give the operator multiple views of the system. As the picture below shows, the left monitor displays the operator view, the participant's view, and the operator control interface window; the right monitor displays the 3D stereoscopic split screen shown in the HMD.


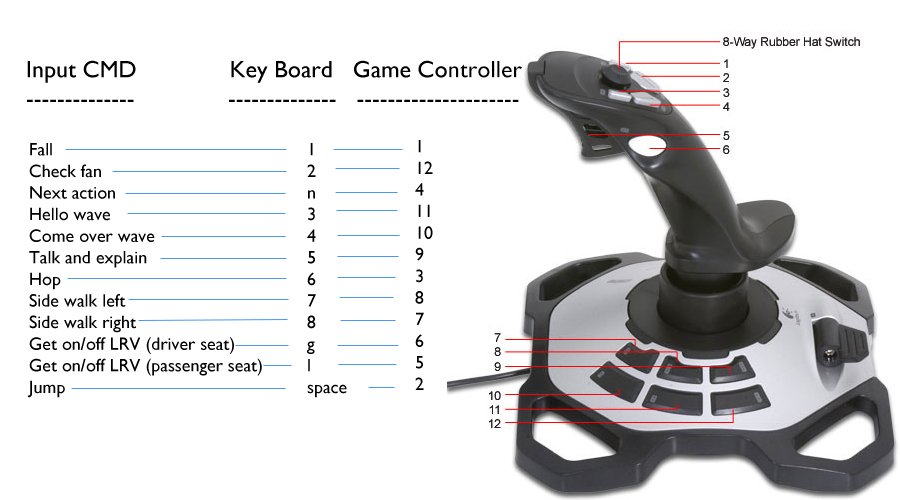

The operator can use a keyboard, a mouse, and a game controller to control the system. A participant can use a game controller to experience driving the moon buggy. Input commands are mapped onto the keys of the keyboard and the buttons of the game controller. See the following image for the key-button mapping table.


The prop of a lunar rock and its counterpart in the virtual space. The key-button layout.
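The actual key-button assignments appear only in the mapping table image. A minimal sketch of the idea, with hypothetical command names and bindings, routes a keyboard key and a game controller button to the same command so that either device can trigger it:

```python
from enum import Enum


class Command(Enum):
    """Hypothetical commands; the real set is defined by the mapping table."""
    JACK_POINT = "jack_point"
    ENTER_LRV = "enter_lrv"
    START_DRIVING = "start_driving"
    STOP_DRIVING = "stop_driving"


# (device, input) -> command. Every binding here is an illustrative placeholder.
BINDINGS = {
    ("keyboard", "P"): Command.JACK_POINT,
    ("gamepad", "Y"): Command.JACK_POINT,
    ("keyboard", "E"): Command.ENTER_LRV,
    ("gamepad", "A"): Command.ENTER_LRV,
    ("keyboard", "G"): Command.START_DRIVING,
    ("gamepad", "START"): Command.START_DRIVING,
    ("keyboard", "S"): Command.STOP_DRIVING,
    ("gamepad", "B"): Command.STOP_DRIVING,
}


def resolve(device, key):
    """Translate a raw input event into a command, or None if it is unbound."""
    return BINDINGS.get((device, key.upper()))
```

Keeping the bindings as a data table rather than scattered conditionals makes it easy to rebind a key without touching the code that carries out each command.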


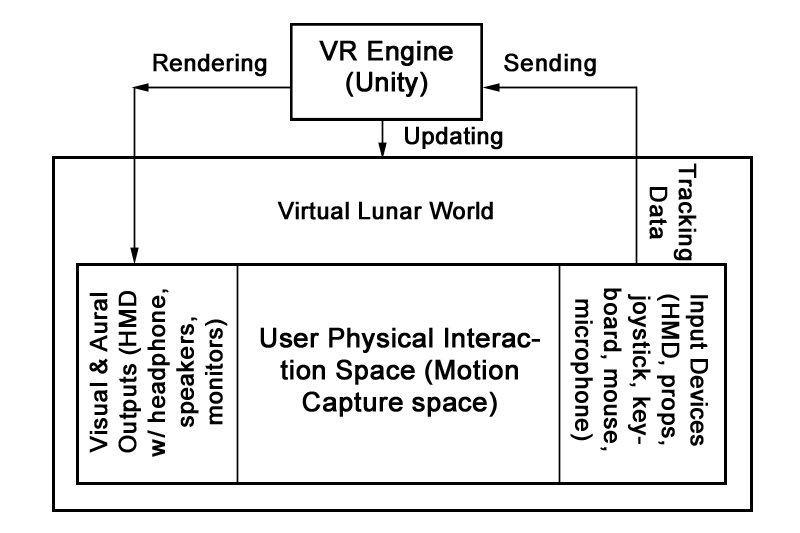

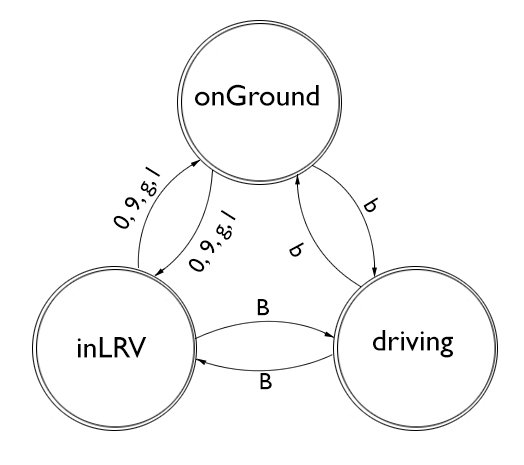

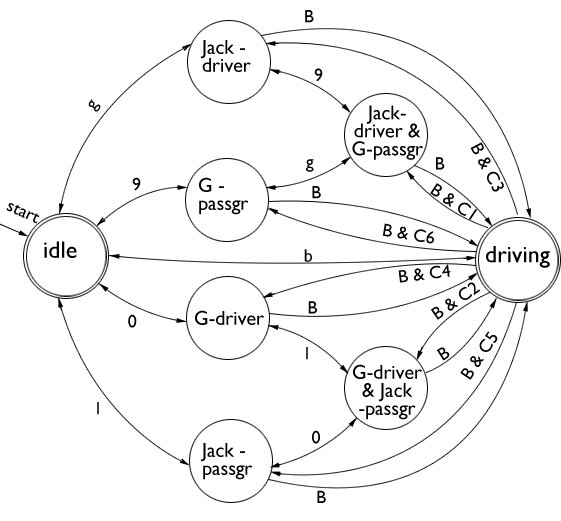

The system structure. The three modes at the top level. The finite states in the inLRV mode.

In The Moon Experience, the user’s physical interaction space, defined by the motion capture volume, is registered in the virtual lunar world. The input devices track the user and monitor the props in the physical space. The motion capture data is sent to the VR engine to update the real-time movements from the participant's point of view. The mechanics control all actions and lay out the structure of the system. At the top level there are three program modes: onGround, inLRV*, and driving. The Moon Experience is always in exactly one of these three modes. Within each mode, finite states define the detailed behavior of the system (see the right image above).


*inLRV refers to the state in which the participant is in the vehicle but not yet in driving mode.
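The top-level mode structure maps naturally onto a finite state machine. In the sketch below, the three mode names come from the text, while the event names, the starting mode, and the allowed transitions are assumptions made for illustration:

```python
from enum import Enum


class Mode(Enum):
    ON_GROUND = "onGround"
    IN_LRV = "inLRV"
    DRIVING = "driving"


# Assumed (mode, event) -> next mode table; the source names the modes, not the events.
TRANSITIONS = {
    (Mode.ON_GROUND, "board_rover"): Mode.IN_LRV,
    (Mode.IN_LRV, "leave_rover"): Mode.ON_GROUND,
    (Mode.IN_LRV, "start_driving"): Mode.DRIVING,
    (Mode.DRIVING, "stop_driving"): Mode.IN_LRV,
}


class ModeMachine:
    """Holds exactly one top-level mode at any time."""

    def __init__(self):
        self.mode = Mode.ON_GROUND   # assumed starting mode

    def handle(self, event):
        """Apply an event; return True if it changed the mode, False if it was ignored."""
        nxt = TRANSITIONS.get((self.mode, event))
        if nxt is None:
            return False   # event not valid in the current mode
        self.mode = nxt
        return True
```

The finite states inside each mode could be modelled the same way, as a second table consulted only while the machine is in that mode.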


Motion capture actor: Tom Heban (MFA student in Digital Animation and Interactive Media, Design Department).

Voice actors: Trent Rowland (undergraduate student, Theatre Department) as "Jack"; Tom Heban as "Houston Control Center."

Costume designer (spacesuit): Samantha Kuhn (MFA student in Costume Design, Theater Department).
